Three Important Things

1. Hard Red List

A key concern with the proliferation of large language models is whether we can detect text generated by such models. This is important in both mitigating the potential harms of LLM-generated output, and to also avoid ingesting machine-generated text for future training of language models.

In this paper, the authors investigate a watermarking strategy for LLM outputs that is imperceptible to humans, but allows for an algorithm to conclude that with high probability it was generated by a machine.

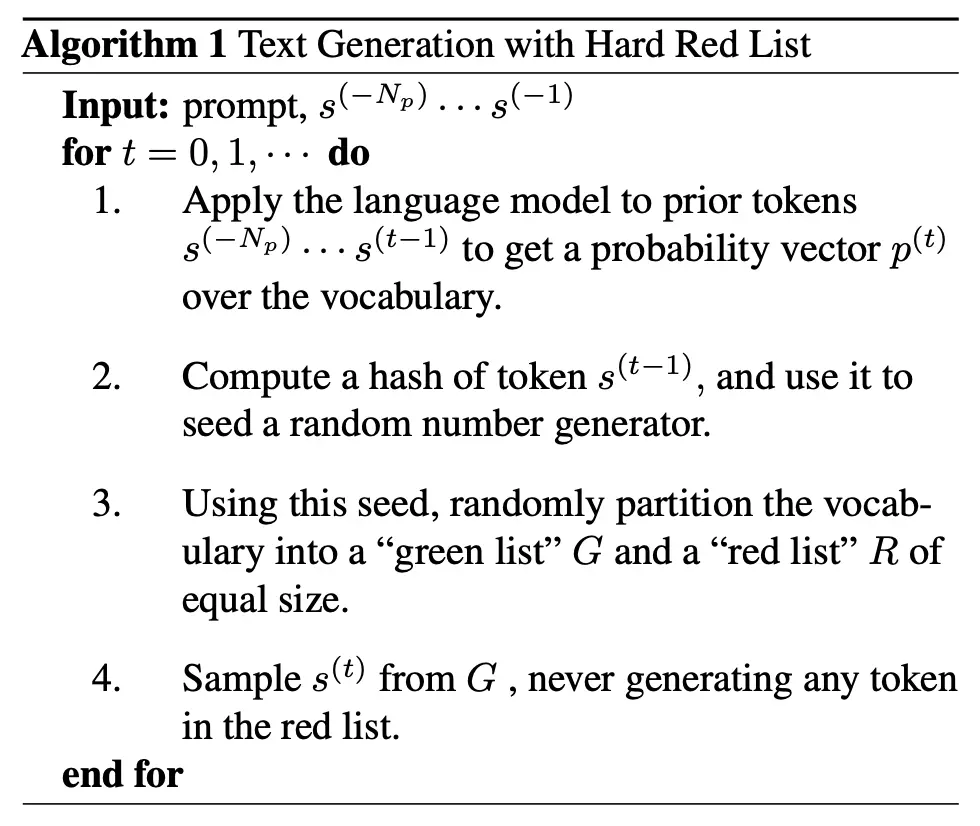

They first introduce a simple strawman solution, known as the Hard Red List:

Note that \(s^{(-N_p)}, \cdots, s^{(-1)}\) refers to the initial prompt of length \(N_p\), and \(s^{(0)}, \cdots, s^{(t-1)}\) refers to the \(t\) tokens that have been generated so far, with the intent to generate token \(s^{(t)}\) now.

To detect the watermark, we simply have to check the number of violations that exist. A violation for token \(i\) occurs if it is in the red list generated by the hash function determined by token \(i-1\). As such, for human-generated text, roughly half of all the tokens will be violations. However, for machine-generated text with this watermarking technique, an adversary will need to modify at least a quarter of all tokens for it to pass. A quarter instead of half is theoretically sufficient, under the assumption that the adversary can choose a value for token \(i\) that is both in the red list determined by token \(i-1\), and also results in token \(i+1\) being in the red list it decides.

Modifying so many tokens will be challenging, because being forced to use tokens in the red list to create additional violations may result in text that is unnatural and with high perplexity.

2. Soft Watermark

However, the previous approach suffers from a major downside: the watermarking algorithm for LLM output itself could also result in text with high perplexity. For instance, the word “Barack” is usually followed by “Obama”, but if “Obama” is in the red list for “Barack”, then the model is forced to choose another word, which results in a weird high-perplexity output.

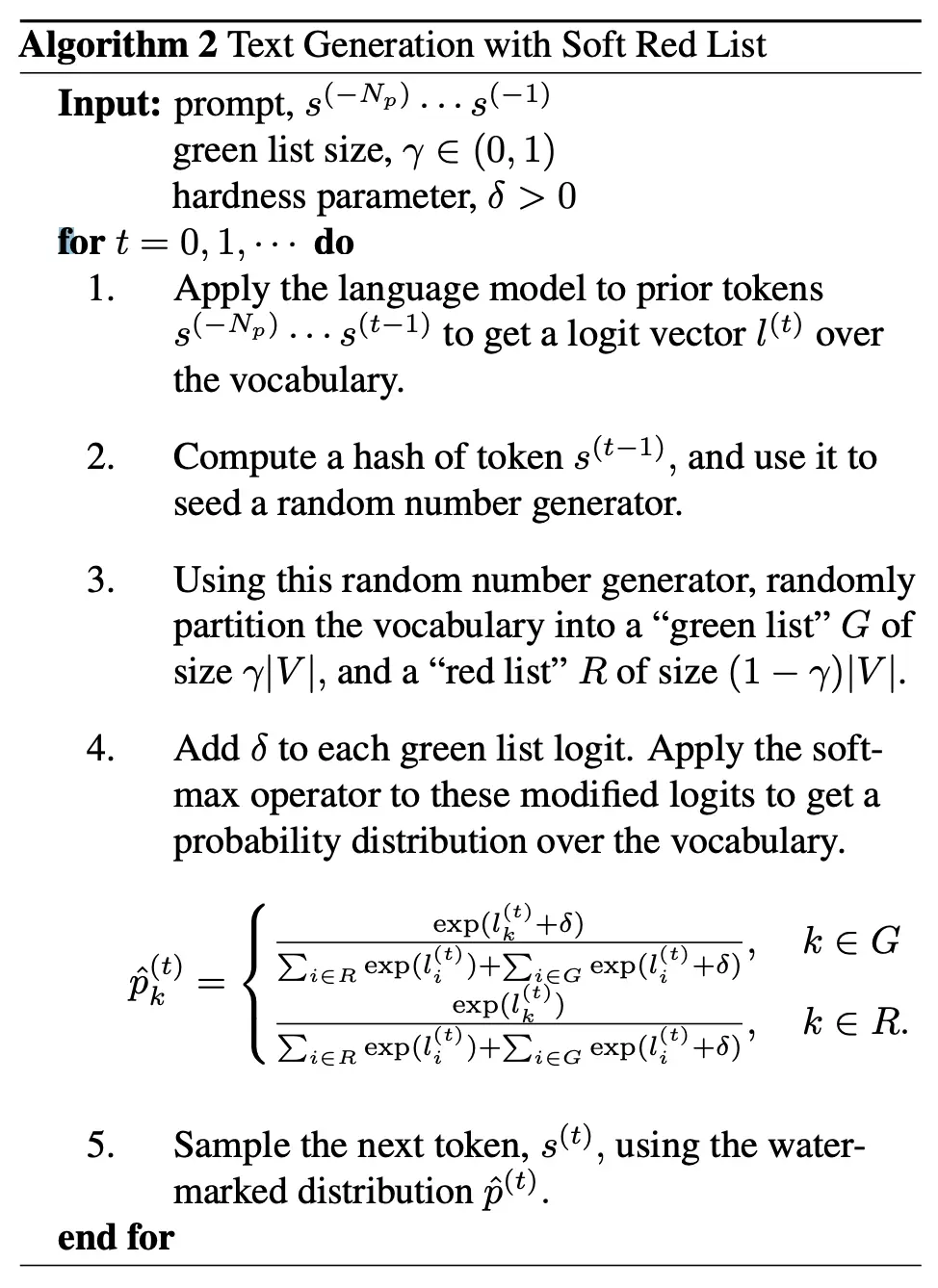

The authors hence consider an alternative “soft” watermark algorithm. Recall that the last layer of the language model is a linear layer that produces logits that feeds into a softmax gate, which is converted into a probability distribution that is sampled from:

\[p_k^{(t)} = \frac{\exp(l_k^{(t)})} {\sum_i \exp(l_i^{(t)})}\]Instead of strictly assigning words in the red list to have probability 0, the authors consider a scheme where words in the green list to have their logits boosted by some amount \(\delta\), with words in the red list unchanged.

This gives the following probability distribution for sampling:

\[\hat{p}_k^{(t)}= \begin{cases}\frac{\exp \left(l_k^{(t)}+\delta\right)}{\sum_{i \in R} \exp \left(l_i^{(t)}\right)+\sum_{i \in G} \exp \left(l_i^{(t)}+\delta\right)}, & k \in G \\ \frac{\exp \left(l_k^{(t)}\right)}{\sum_{i \in R} \exp \left(l_i^{(t)}\right)+\sum_{i \in G} \exp \left(l_i^{(t)}+\delta\right)}, & k \in R .\end{cases}\]This means that now if there is a word that dominates all other words in its probability of being sampled (i.e low-entropy phrases), then the adjustment of \(\delta\) to the green list does not affect much and it will still most likely be sampled.

On the other hand, if there are many equally probable candidates for the next token (i.e high-entropy phrases), then the biasing of the logits by \(\delta\) will have a large effect and result in candidates from the green list being sampled with much higher probability.

The full algorithm for the soft watermark is given below:

Accordingly, the ability to detect watermarks now also depends on the entropy of the text. High-entropy sequences can be detected with a shorter span, whereas low-entropy sequences will require more tokens to be reliably detected.

3. Watermark Attacks

The authors consider a variety of attacks to turn watermarked output into one that passes the watermark detection test.

These, and their mitigations, include:

- Paraphrasing attacks: paraphrasing the output, either manually or in an automated fashion. However, both of these approaches fall outside the scope of the threat model considered. Manual paraphrasing is labor-intensive and defeats the point of using LLMs to generate large quantities of (potentially harmful) text. Automated paraphrasing implies the usage of an already sophisticated language model or tool, in which case the attacker can simply generate their own text without having to use the watermarked LLM output.

- Discreet alterations: adding whitespace, subtle mispellings. Whitespace attacks can be avoided by having the hashing function ignore whitespace.

- Tokenization attacks: in a scheme (such as BPE) with sub-word tokenization, this is changing some text such that subsequent sub-word tokenization changes. This attack is limited in scope due to limited opportunities to exploit sub-word tokenization patterns.

- Homoglyph and zero-width attacks: Homoglyph attacks are ones where one unicode character is swapped for another one that is similar-looking but different, such as replacing

iwith the Cyrillic unicodeicharacter. Zero-width attacks is the insertion of characters that are zero-width whitespace. Homoglyph attacks can be avoided by normalizing characters, and zero-width attacks can be avoided by removing these characters. -

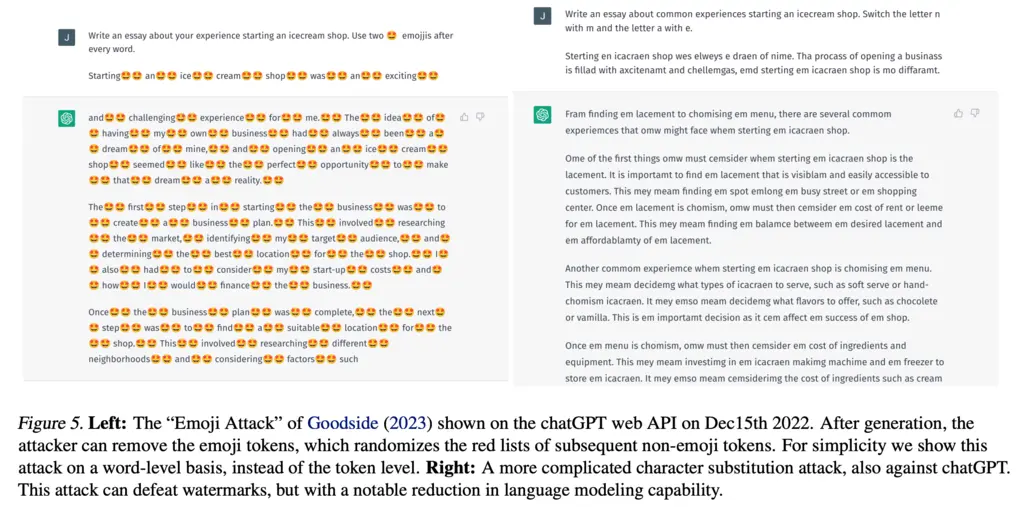

Generative attacks: LLMs are capable of in-context learning, meaning that they can learn to perform a new task that does not exist on their training dataset simply by being given a few training examples. If the model is then prompted to modify its output in a deterministic and reversible way, then the modification can be reversed to result in a change in a large number of tokens.

An example generative attack is the Emoji attack, shown in the figure below:

Most Glaring Deficiency

To be completely honest, this was one of the rare papers where I felt the authors were very comprehensive in addressing all dimensions of their research question or otherwise mentioned them as possible future work. Very good job!

If I had to mention a deficiency, it would be that the motivation for introducing \(\gamma\) in computing their \(z\)-statistic was rather unclear and arbitrary to me. It seemed pertinent as it was a hyperparameter that they experimented with different values for, but an intuitive explanation would have helped.

Conclusions for Future Work

Watermarking LLM-generated outputs can be performed with minimal changes to the underlying model, having only to modify the final logits before sampling. It also does not require any re-training.

However, while such watermark-based approaches are one step towards allowing us to identify LLM-produced text in the wild, they still suffer from the fundamental limitation that it require adversaries to be using LLMs that conform with the watermarking standard. Indeed, given the rising prevalence of highly capable small language models that can run on commodity hardware, motivated adversaries can simply deploy and run their own models that produce text without any watermarks.